RSS discussion paper on model-based ranking of journals, using citation data

This paper has been around on arXiv for quite some time. Now, having survived various rounds of review (and having grown quite a bit as a result of reviewers’ requests!), it will be discussed at an Ordinary Meeting of the Royal Statistical Society on 13 May 2015. The recent Allstat announcement gives instructions on how to contribute to the RSS discussion, either in person or in writing.

Here is the link to the preprint on arXiv.org:

Statistical modelling of citation exchange between statistics journals by Cristiano Varin, Manuela Cattelan and David Firth.

(Note that the more ‘official’ version, made public at the RSS website, is an initial, uncorrected printer’s proof of the paper for JRSS-A. It contains plenty of typos! These will of course be corrected before the paper appears in the Journal.)

The paper has associated online supplementary material (zip file, 0.4MB) comprising datasets used in the paper, and full R code to help potential discussants and other readers to replicate and/or experiment with the reported analyses.

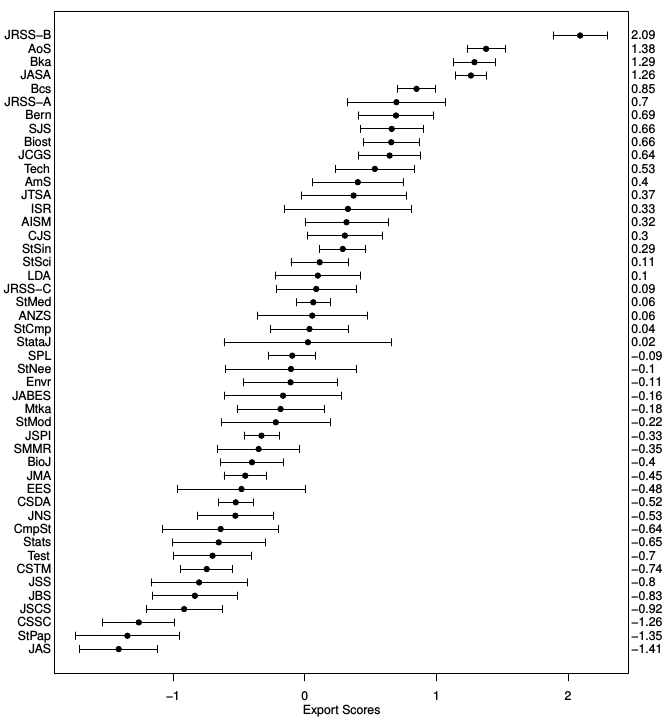

Figure 4 from the paper (a ranking of statistics journals based on the Bradley-Terry model)

The paper’s Summary is as follows:

Rankings of scholarly journals based on citation data are often met with skepticism by the scientific community. Part of the skepticism is due to disparity between the common perception of journals’ prestige and their ranking based on citation counts. A more serious concern is the inappropriate use of journal rankings to evaluate the scientific influence of authors. This paper focuses on analysis of the table of cross-citations among a selection of Statistics journals. Data are collected from the Web of Science database published by Thomson Reuters. Our results suggest that modelling the exchange of citations between journals is useful to highlight the most prestigious journals, but also that journal citation data are characterized by considerable heterogeneity, which needs to be properly summarized. Inferential conclusions require care in order to avoid potential over-interpretation of insignificant differences between journal ratings. Comparison with published ratings of institutions from the UK’s Research Assessment Exercise shows strong correlation at aggregate level between assessed research quality and journal citation ‘export scores’ within the discipline of Statistics.
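The Summary and the Figure 4 caption refer to modelling citation exchange with the Bradley-Terry model. As a hedged illustration only, here is a minimal Python sketch of the basic Bradley-Terry (Stigler) formulation. Each citation of journal i by journal j counts as a "win" for i over j. Given the total number of citations exchanged by a pair, the number received by i is then binomial with log-odds μ_i − μ_j, and μ_i plays the role of an export score. The function name `bradley_terry_export_scores`, the toy 3-journal counts and the classical MM (Zermelo) fixed-point iteration are all assumptions made for illustration. This is not the authors' R code from the supplementary material, and their analysis may differ, for example in how it handles the heterogeneity mentioned above.

```python
import math


def bradley_terry_export_scores(cites, n_iter=1000, tol=1e-10):
    """Fit a basic Bradley-Terry (Stigler) model to a cross-citation table.

    Hypothetical helper for illustration. cites[i][j] is the number of times
    journal i is cited by journal j, i.e. a "win" for i over j. Diagonal
    entries (self-citations) are ignored. Assumes every journal receives at
    least one citation and the comparison graph is connected, so the MLE exists.
    Returns export scores mu_i = log(pi_i), centred to sum to zero.
    """
    k = len(cites)
    # Total wins: citations received by journal i from the other journals.
    wins = [sum(cites[i][j] for j in range(k) if j != i) for i in range(k)]
    # Total citations exchanged between each pair, in either direction.
    pair_totals = [
        [cites[i][j] + cites[j][i] if i != j else 0 for j in range(k)]
        for i in range(k)
    ]
    pi = [1.0] * k
    for _ in range(n_iter):
        # MM / Zermelo update: pi_i = W_i / sum_j n_ij / (pi_i + pi_j).
        new_pi = [
            wins[i] / sum(pair_totals[i][j] / (pi[i] + pi[j]) for j in range(k) if j != i)
            for i in range(k)
        ]
        # Strengths are identified only up to scale, so normalise to sum 1.
        total = sum(new_pi)
        new_pi = [p / total for p in new_pi]
        converged = max(abs(a - b) for a, b in zip(new_pi, pi)) < tol
        pi = new_pi
        if converged:
            break
    log_pi = [math.log(p) for p in pi]
    mean = sum(log_pi) / k
    return [lp - mean for lp in log_pi]


# Invented toy counts (NOT data from the paper): row i = citations received by i.
toy = [
    [0, 30, 20],  # journal A cited 30 times by B, 20 times by C
    [10, 0, 25],  # journal B cited 10 times by A, 25 times by C
    [5, 15, 0],   # journal C cited 5 times by A, 15 times by B
]
for name, score in zip("ABC", bradley_terry_export_scores(toy)):
    print(f"{name}: {score:+.3f}")
```

Export scores are identified only up to an additive constant, so the sketch centres them to sum to zero. Only differences between journals are meaningful, and any ranking based on them should also account for their uncertainty, which this sketch does not estimate.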